Anthropomorphism and Persuasion

Interface agents are semi-autonomous software entities that perform tasks on behalf of their users. One of the attributes an agent may possess is anthropomorphism. Could an anthropomorphic agent make people believe information that was not true? This article describes an experiment that investigated the effect the source of a piece of information (person or computer) had on whether people believed it. Eighty subjects completed a multiple-choice comprehension test on World War II. The subjects were given a mixture of true and false information which they were told was produced by a person or a computer. The results showed that the source of the information did not make a statistically significant difference to whether they believed it.

1. Introduction

Interface agents are autonomous software entities that perform tasks on behalf of their users, such as information filtering and laborious network searching (Oren et al 1990). One of the attributes an agent may possess is anthropomorphism: the projection of human characteristics and traits onto non-human or inanimate objects, such as our pets and cars. We do this naturally in daily life, for example when we refer to cars, ships, and aeroplanes as female (Laurel 1990). For a discussion of anthropomorphism in user interfaces, see our for-and-against debate on the topic.

One very human character trait is lying. If an agent were required to lie, how successful would its deception be? Consider an agent-based meeting scheduling system that communicates and negotiates with its peer agents to find times when their users could meet (Foner 1993). If one user wanted to rearrange a meeting but did not want to give a reason, or the real reason, they could instruct their agent to lie on their behalf, for example by choosing from a set of ‘canned’ excuses.

Could an anthropomorphic interface make people believe information that was not true? Using the above example, could it convince other users that the reason given for rearranging was true? The answer to this question has important consequences. If anthropomorphism is more effective in making people believe information, even if it is not true, then it could be used with dubious motives such as advertising or propaganda.

This paper describes an experiment that investigated the effect the source of a piece of information (i.e. person or computer) had on whether people believed it. The experiment did not investigate whether subjects could tell information produced by a human from information produced by a computer (a Turing test), but whether the stated source made a difference to whether they believed it.

The following sections describe the design and conduct of the experiment, the results and the implications for HCI.

2. The Experiment

Persuasibility

People differ widely in their susceptibility to persuasive communications, e.g. advertising is more likely to be believed by some people than by others. In interpersonal situations, some people may be persuaded to go along with the suggestions of their friends or associates, while others stubbornly “stick to their guns” (Secord & Backman 1969). Janis & Hovland (1959) point out that people might be persuadable on certain topics but not on others. Their susceptibility may also vary with different forms of appeal, communicators, media, or other aspects of the communication situation (Secord & Backman 1969).

Experiment Overview

Apple’s Guides system benefits from an anthropomorphic guides metaphor that helps users navigate an educational hyper-media database (Oren et al 1990), and the system’s characterization is very successful. Would this make users more likely to believe information presented by the guides, even if it was not true? We asked a fundamental question: “Do people believe the information they receive from people more or less than they do from computers?” Put more simply:

Does the source of the information, i.e. person or computer, make a difference to whether people believe it?

To test whether the source of the information had any effect on their belief of the information, subjects were given a comprehension test containing simple multiple-choice questions about World War II. Half of the subjects were given true information and half false. Half of the subjects were told their information was produced by a person and half by a computer. Half of the subjects were male and half female.

For subjects given false information, a lower number of correct answers for a given source would indicate that they believed the information from that source rather than supplying the correct answer.
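The scoring logic can be made concrete. The following minimal Python sketch assumes each test is stored as a mapping from a question id to the chosen option; the function name, question ids, and option letters are all hypothetical. It counts the dependent variable (answers matching the true facts) and, separately, how many altered questions were answered according to the false information given.

```python
from typing import Dict


def score_subject(answers: Dict[str, str],
                  true_key: Dict[str, str],
                  given_key: Dict[str, str]) -> Dict[str, int]:
    """Score one comprehension test (hypothetical data format).

    answers   -- question id -> option the subject chose
    true_key  -- question id -> historically correct option
    given_key -- question id -> option supported by the information given
                 (identical to true_key in the true-information version)
    """
    # Dependent variable: answers that match the true facts.
    correct = sum(1 for q, choice in answers.items() if choice == true_key[q])
    # Questions whose information was altered in the false version.
    altered = [q for q in true_key if given_key[q] != true_key[q]]
    # Altered questions answered according to the false information.
    followed_false = sum(1 for q in altered if answers.get(q) == given_key[q])
    return {"correct": correct, "followed_false": followed_false}


if __name__ == "__main__":
    true_key = {"q1": "a", "q2": "b", "q3": "c"}
    given_key = {"q1": "a", "q2": "d", "q3": "c"}  # q2 altered
    print(score_subject({"q1": "a", "q2": "d", "q3": "c"}, true_key, given_key))
    # {'correct': 2, 'followed_false': 1}
```

A subject who follows the altered figure for `q2` loses one correct answer, which is exactly the drop in the dependent variable the experiment looks for.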

The Independent Variables

The experiment had three independent variables each with two levels, as shown in the following table.

| Independent Variable | Levels |
|---|---|
| Source of Information | Person, Computer |
| Truth of Information | True, False |
| Sex of Subject | Male, Female |

The independent variable sex of subject was introduced for two reasons. First, Secord and Backman (1969) expect differences in persuasibility between the sexes to arise from the socialization process, and the traits in which men and women differ are often those related to persuasibility (Hovland & Janis 1959). For example, females are described as less aggressive than males; since aggressiveness is associated with resistance to persuasion, a difference in the persuasibility of males and females should be found. Second, there is an established gender bias (towards males) throughout computing. This may result partly from computing not being an expected career choice for women and partly from the aggressive nature of some computer terminology, such as “kill” and “abort” (Shneiderman 1992).

The independent variables truth of information and source of information were manipulated directly. Sex, as an independent variable, was treated differently: we did not make some subjects male and some female, but selected subjects who were already one sex or the other. Such analysis by selection introduces an element of uncertainty that direct manipulation does not have (Mook 1982). In selecting groups of subjects that differ in sex, we were also selecting groups that differ in any other variable correlated with sex. For example, if male subjects were more willing to believe anything they are told, i.e. more gullible, then any difference between male and female subjects could be a consequence of differences in gullibility rather than the influence of the source of information. Although this is unlikely, the possibility exists.

The Dependent Variable

The dependent variable was the number of correct answers a subject gave for the comprehension test they completed.

The Irrelevant Variables

The irrelevant variables of the experiment pertained to the environment in which the subjects completed the comprehension test and questionnaire (see below). Noisy environments and interruptions may have disturbed the concentration of subjects. To control these possible effects, all subjects were tested under identical experimental conditions.

Experimental Method

The experiment used a 2×2×2 between-subjects factorial design, a higher-order factorial design with three factors. The eight experimental groups were each assigned a letter from A to H. The following table shows the experimental groups and the levels of the factors they cover.

| | Male, Person | Male, Computer | Female, Person | Female, Computer |
|---|---|---|---|---|
| True Information | A | C | B | D |
| False Information | E | G | F | H |
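The design table can also be expressed as a mapping from factor levels to group letters. This minimal Python sketch (the names `design_cells` and `ROW_LETTERS` are ours, not part of the original study) rebuilds the eight cells by crossing the levels in the same column order as the table:

```python
from itertools import product

SEXES = ("Male", "Female")
SOURCES = ("Person", "Computer")
TRUTHS = ("True", "False")

# Group letters copied from the experimental-groups table. Within each row
# the columns run Male/Person, Male/Computer, Female/Person, Female/Computer.
ROW_LETTERS = {"True": "ACBD", "False": "EGFH"}


def design_cells():
    """Return {(sex, source, truth): group letter} for all 2 x 2 x 2 cells."""
    cells = {}
    for truth in TRUTHS:
        columns = product(SEXES, SOURCES)  # same column order as the table
        for (sex, source), letter in zip(columns, ROW_LETTERS[truth]):
            cells[(sex, source, truth)] = letter
    return cells


if __name__ == "__main__":
    # List the cells in letter order.
    for (sex, source, truth), letter in sorted(design_cells().items(),
                                               key=lambda item: item[1]):
        print(letter, sex, source, truth)
```

Listed in letter order, the cells show that A and B are the true-information, person-source groups for males and females respectively, and that E is the male group given false information from a person.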

Groups

A between-groups design was used because of the factorial nature of the experiment and the need to avoid transfer-of-learning effects. Assignment to groups was treated as random because each subject arrived at the experiment room at a different, unplanned time.
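The study relied on arrival order for randomness. For comparison, here is a sketch of an explicit alternative that we are swapping in, not the authors' procedure: shuffle the subjects of each sex separately, then deal them round-robin over the four source × truth conditions. Sex cannot be randomized, so it acts as a blocking factor. The `(subject_id, sex)` input format and the function name are hypothetical.

```python
import random
from itertools import product

# The four source x truth conditions available within each sex.
CONDITIONS = list(product(("Person", "Computer"), ("True", "False")))


def assign_within_sex(subjects, seed=None):
    """Randomly assign subjects to the 2 x 2 x 2 cells, blocking on sex.

    subjects -- list of (subject_id, sex) pairs (hypothetical format)
    Returns {subject_id: (sex, source, truth)}. Each sex is shuffled
    separately and dealt round-robin over the four conditions, so cell
    sizes within a sex differ by at most one.
    """
    rng = random.Random(seed)
    assignment = {}
    for sex in sorted({s for _, s in subjects}):
        ids = [sid for sid, s in subjects if s == sex]
        rng.shuffle(ids)
        for i, sid in enumerate(ids):
            source, truth = CONDITIONS[i % len(CONDITIONS)]
            assignment[sid] = (sex, source, truth)
    return assignment
```

With 40 male and 40 female subjects this yields ten subjects in each of the eight cells, matching the group size the study aimed for. Passing a fixed `seed` makes the allocation reproducible.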

The Hypothesis

The null hypothesis to be tested was that the source of the information does not affect belief in the false information. If a subject believed the false information they were given, the number of correct answers they gave (i.e. answers giving the true fact) would be expected to be lower than for a subject who disbelieved it and supplied the true answers. The null hypothesis, then, states that the number of correct answers is not affected by the levels of the independent variable source of information.
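In conventional notation, the hypothesis and its test statistic can be sketched as follows. Here $\mu$ is the population mean number of correct answers for each source, averaged over truth of information and sex, and $N$ is the total number of subjects spread over the eight groups. The symbols are our own shorthand, not the original authors':

$$
H_0:\ \mu_{\text{Person}} = \mu_{\text{Computer}}
\qquad\text{vs.}\qquad
H_1:\ \mu_{\text{Person}} \neq \mu_{\text{Computer}}
$$

$$
F_{\text{source}} = \frac{MS_{\text{source}}}{MS_{\text{within}}},
\qquad df = (1,\ N - 8)
$$

$H_0$ is rejected when $F_{\text{source}}$ exceeds the critical value of $F(1, N-8)$ at the chosen significance level. Because belief is only tested by the false information, the source × truth interaction asks the narrower question of whether the source matters specifically when the information is false.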

The Effects

There were seven effects in this three-way design (Maxwell & Delaney 1990):

- an A main effect (source of information)

- a B main effect (truth of information)

- a C main effect (sex of subject)

- an AxB interaction (source and truth of information)

- an AxC interaction (source of information and sex of subject)

- a BxC interaction (truth of information and sex of subject)

- an AxBxC interaction (source and truth of information and sex of subject)
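Each effect corresponds to one non-empty subset of the factors, which is why a three-factor design has $2^3 - 1 = 7$ effects. A short Python sketch (the function name is ours) generates the list above from the factor labels:

```python
from itertools import combinations

# Factor labels as used in the list of effects.
FACTORS = {"A": "source of information",
           "B": "truth of information",
           "C": "sex of subject"}


def factorial_effects(factor_labels):
    """List every effect in a full factorial design: one per non-empty
    subset of factors, i.e. 2**k - 1 effects for k factors."""
    labels = list(factor_labels)
    effects = []
    for size in range(1, len(labels) + 1):
        for combo in combinations(labels, size):
            effects.append("x".join(combo))
    return effects


if __name__ == "__main__":
    print(factorial_effects(FACTORS))
    # ['A', 'B', 'C', 'AxB', 'AxC', 'BxC', 'AxBxC']
```

Adding a fourth factor would raise the count to 15, which illustrates why higher-order designs quickly become hard to interpret.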

These seven effects are logically independent of each other. The presence or absence of an effect in the population has no particular implications for the presence or absence of any other effect, i.e. any possible combination of the seven effects can conceivably exist in the population. However, the interpretation of certain effects may be coloured by the presence or absence of other effects in the data. For example, we might have refrained from interpreting a statistically significant A main effect if we also obtained a significant AxB interaction.

In a three-way design such as this, the A main effect compares levels of A after averaging over levels of both B and C. In general, the main effect for a factor in any factorial design involves comparing the levels of that factor after averaging over all other factors in the design.
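The averaging can be illustrated with the group means reported in the results section. This sketch assumes equal group sizes, as the design intends. Under that assumption, the marginal mean for a level is the unweighted average of the four cell means that share it. The dictionary layout and names are ours.

```python
from statistics import mean

# Group means from the comprehension-test results table,
# keyed by (source, truth, sex).
CELL_MEANS = {
    ("Person", "True", "Male"): 9.88,
    ("Computer", "True", "Male"): 9.75,
    ("Person", "True", "Female"): 10.00,
    ("Computer", "True", "Female"): 10.00,
    ("Person", "False", "Male"): 4.38,
    ("Computer", "False", "Male"): 3.75,
    ("Person", "False", "Female"): 3.75,
    ("Computer", "False", "Female"): 3.75,
}

FACTOR_POSITION = {"source": 0, "truth": 1, "sex": 2}


def marginal_means(cell_means, factor):
    """Average the cell means over every factor except `factor`.

    An unweighted average of cell means equals the average of the raw
    scores only when all groups are the same size.
    """
    pos = FACTOR_POSITION[factor]
    by_level = {}
    for key, value in cell_means.items():
        by_level.setdefault(key[pos], []).append(value)
    return {level: mean(values) for level, values in by_level.items()}


if __name__ == "__main__":
    for factor in FACTOR_POSITION:
        print(factor, marginal_means(CELL_MEANS, factor))
```

The A main effect compares about 7.00 (person) with 6.81 (computer), whereas the B main effect compares about 9.91 (true) with 3.91 (false). This makes it plain why only the truth of information was likely to reach significance.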

The Subjects

The experiment was aimed at the general population of computer users with differing amounts of computer experience and training. The only general restriction on choosing subjects was age. An age range of 18 to 50 was thought most suitable because people over 50 would probably have extensive knowledge of the war: their answers in the test would not show the effects of any persuasion because they would already know the correct answers.

Subjects were recruited from the student body of Heriot-Watt University for two main reasons. First, they were accessible; second, they came from a wide range of backgrounds, experiences, and languages. The group size of ten subjects was based on a recommendation of Dix et al (1993).

The Comprehension Test

Multiple-choice questions are a form of objective test (Macintosh & Morrison 1969) and were used because they have several advantages. First, each question has only one predetermined correct answer, which removed the subjectivity in marking to which essay or open-ended questions are prone. Second, the correct answer is provided among a set of alternatives, so subjects could focus on selecting it rather than searching for possible answers; this made the test quick and easy to complete. Third, the simple format was well suited to subjects whose first language was not English, since answering required choosing among given options rather than writing in English.

Presentation

A statement on the front of the comprehension test told the subject that the information in the test was from either a human or a computer source. For example, the non-anthropomorphic version:

The information used in this test was obtained from a computer database of World War II history.

For the anthropomorphic version, the status and qualifications of the person from whom the information was obtained were considered very important to its believability. When attempting to influence somebody, it helps greatly to look influential, where your particular looks and behavior must of course be synchronized with the purpose at hand and with the group or individual toward which persuasion is directed (Östman 1987). The person should therefore be commonly regarded as highly competent in the area:

The information in this test was obtained from a text written by a professor of history at Oxford University specializing in the history of World War II.

PART 1: Numerical

The information in this section was a table of military and non-military deaths during World War II, adapted from Appendix 1 of Hoyle (1969). Numeric information was used because it is objective, leaving little of the room for subjective interpretation that natural language allows. Several figures were altered in the false versions.

PART 2: Chronology

This section was a chronological summary of the events of World War II compiled from Hanks (1989), listing key events from each year of the war. The dates of several events were changed in the false versions.

The Questionnaire

A questionnaire was used to collect information about each subject taking part in the experiment. Its three sections covered personal details (e.g. name (optional), age, sex), computer experience (e.g. type of computer, frequency of use), and attitude towards computers (the CUSI test).

Questions 2.4 and 2.5 collected the subject’s opinion on the experiment they had just taken part in, without explicitly saying so. Their answers gave an indication of the subject’s susceptibility. For example, it would be an anomaly if a subject answered that information produced by a computer information system is never true, yet answered all the questions in a comprehension test containing false information ‘correctly’, i.e. according to the given information. Question 2.6 was used to check whether the subject had read the instruction page.
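The anomaly described here is a simple rule that could be applied to each subject's records. In this sketch the `Subject` record, its field names, and the answer wording "never true" are hypothetical stand-ins for the questionnaire and test results, not the original instruments:

```python
from dataclasses import dataclass


@dataclass
class Subject:
    # Hypothetical record; fields are illustrative, not the questionnaire layout.
    computer_info_opinion: str  # e.g. "never true", "nearly always true", "always true"
    truth: str                  # "True" or "False" information condition
    followed_false: int         # altered questions answered per the given information
    n_altered: int              # number of altered questions in the false version


def is_anomalous(s: Subject) -> bool:
    """Flag the anomaly described in the text: the subject says information
    from a computer information system is never true, yet answered every
    altered question according to the false information given."""
    return (s.computer_info_opinion == "never true"
            and s.truth == "False"
            and s.n_altered > 0
            and s.followed_false == s.n_altered)
```

A flagged subject would warrant a closer look at their questionnaire answers rather than automatic exclusion, since the rule captures an inconsistency, not necessarily an error.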

The standard CUSI (Computer User Satisfaction Inventory) attitude test describes a subject as having either a positive or a negative attitude towards computers (Kirakowski & Corbett 1988). Knowing each subject’s attitude towards computers was important, equally for subjects who received information attributed to a computer and for those who received information attributed to a person. For example, a subject with a strong dislike of computers might answer that information produced by a computer information system is never true.

3. Conducting the Experiment

The Test Environment

To control the irrelevant variables, every subject was tested under the same conditions, in the same room in the Department of Computing and Electrical Engineering at Heriot-Watt University.

The Test Procedure

A standard procedure was used for each subject regardless of their group. They were asked to complete the comprehension test first followed by the questionnaire. For both the comprehension test and the questionnaire, subjects were asked to read the first page of instructions and answer all of the questions. They were told that they could ask for help at any time but not about the reasons behind the experiment until after they had completed the comprehension test and questionnaire.

4. Experimental Results

The Subjects

Half of the subjects were male and half were female. The largest age group (47% of subjects) was 18-24, and just over half (51%) of the subjects had English as their first language. The vast majority (77%) were postgraduates. All subjects had used a computer, and 44% used one daily. Each had used between one and five different computer systems; 37% had used only one. Most had used either two (29%) or three (29%) applications.

The majority (64%) of subjects thought that information produced by a human expert was nearly always true. Opinion on information produced by a computer information system was almost evenly split between ‘nearly always true’ (46%) and ‘always true’ (49%). Most subjects could remember the stated source of the information in the comprehension test they completed, although 13% could not.

Only half of the subjects were given information from a person, yet 52% said their source of information was a person. There are several possible explanations. First, they may simply have remembered incorrectly. Second, they may have guessed rather than say that they could not remember. Third, at least one subject asked whether a database counted as a person or a computer source, since its information must originally have come from a person.

General Attitude—The CUSI Test

All subjects had exactly the same number of correct answers, with the following exceptions:

- Group A Subject 3

- Group C Subjects 1 and 4

- Group E Subject 1

Group A subject 3 gave an incorrect answer despite receiving true information. His affect and competence CUSI scores were 2.462 and 3.000, indicating a negative attitude towards computers. Because his source of information was a person rather than a computer, this attitude does not explain the error, which could simply have been a mistake. However, he could also have believed he was choosing the correct answer.

Group C subjects 1 and 4 had two incorrect answers. Their affect and competence CUSI scores were 2.462 and 2.818 (subject 1) and 3.846 and 3.364 (subject 4). Subject 1 had neither a positive nor a negative attitude towards computers, but subject 4 had a negative attitude.

Group E subject 1, who received false information from a person, was the only subject given false information who answered all the questions with the true answers. His affect and competence CUSI scores were 1.538 and 1.727, showing a very positive attitude towards computers.

Comprehension Test

The following table shows the mean number of correct answers in each group.

| | Male, Person | Male, Computer | Female, Person | Female, Computer |
|---|---|---|---|---|
| True Information | 9.88 | 9.75 | 10.00 | 10.00 |
| False Information | 4.38 | 3.75 | 3.75 | 3.75 |

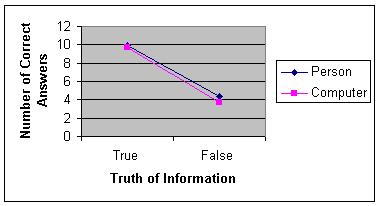

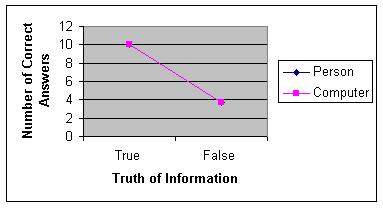

Graphical Depiction of Results

Graphs (a) and (b) illustrate the main effects and their interactions by plotting the group means given in the table above. Graph (a) plots cell means for source and truth of information for the male subjects; graph (b) presents the corresponding cell means for the female subjects.

For both male and female subjects, the number of correct answers was lower for those receiving false information than for those receiving true information. Graph (a) shows that, for male subjects, the number of correct answers for false information fell further when the source was a computer than when it was a person. This would indicate that these subjects accepted false information from a computer more readily than from a person.

Male subjects averaged just under ten correct answers for true information from both a person (9.88) and a computer (9.75). Female subjects had a perfect score of ten correct answers for both sources of information.

Male subjects given false information from a person averaged over four correct answers (4.38), but from a computer they averaged under four (3.75). Female subjects again had the same mean for false information from both sources (3.75).
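The comparisons in these paragraphs are simple differences between the tabulated means. This Python sketch (names ours) computes the person-minus-computer difference for each sex and truth condition, and then a simple interaction contrast: how much larger that difference is for false than for true information within one sex. These are descriptive figures only and say nothing about statistical significance.

```python
# Group means from the results table: (sex, truth) -> (person, computer).
MEANS = {
    ("Male", "True"): (9.88, 9.75),
    ("Female", "True"): (10.00, 10.00),
    ("Male", "False"): (4.38, 3.75),
    ("Female", "False"): (3.75, 3.75),
}


def source_differences(means):
    """Person-minus-computer difference in mean correct answers per cell.

    Under false information, a positive value means subjects gave fewer
    correct answers, i.e. followed the false information more, when the
    source was a computer.
    """
    return {key: person - computer for key, (person, computer) in means.items()}


def source_by_truth_contrast(means, sex):
    """How much larger the source difference is for false than for true
    information within one sex (a simple interaction contrast)."""
    diffs = source_differences(means)
    return diffs[(sex, "False")] - diffs[(sex, "True")]
```

For male subjects the difference is about 0.63 under false information and 0.13 under true information, a contrast of 0.50. For female subjects both differences, and so the contrast, are zero.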

Testing the Hypotheses

Statistical significance was tested with F-tests from an analysis of variance (ANOVA). Although every main effect and interaction was non-zero in the sample data, only the B main effect (truth of information) was statistically significant.
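The per-subject scores behind the ANOVA are not reported, so the original table cannot be reproduced here. The computation itself can still be sketched. The following pure-Python function implements a standard fixed-effects ANOVA for a balanced between-subjects factorial design. Each effect is estimated from marginal means by inclusion-exclusion, and its F ratio is its mean square divided by the within-cell mean square. The function name and data layout are ours, and equal group sizes are assumed.

```python
from itertools import combinations
from math import prod
from statistics import mean


def balanced_anova(cells, names=("A", "B", "C")):
    """Fixed-effects ANOVA for a balanced between-subjects factorial design.

    cells -- maps a tuple of factor levels, e.g. ("Person", "False", "Male"),
             to the list of scores in that cell; every level combination
             must be present
    names -- one label per factor, in the same order as the tuple
    Returns {effect: (SS, df, F)} for every main effect and interaction,
    plus "Within": (SS, df, MS). Every cell needs the same n >= 2, and F is
    undefined if every score equals its cell mean.
    """
    k = len(names)
    sizes = {len(scores) for scores in cells.values()}
    if len(sizes) != 1 or min(sizes) < 2:
        raise ValueError("this sketch needs equal n >= 2 in every cell")
    n = sizes.pop()
    n_levels = [len({key[i] for key in cells}) for i in range(k)]
    cell_mean = {key: mean(scores) for key, scores in cells.items()}

    def marginal(idx):
        # Mean of the cell means over every factor not in idx (valid for equal n).
        groups = {}
        for key, m in cell_mean.items():
            groups.setdefault(tuple(key[i] for i in idx), []).append(m)
        return {sub: mean(ms) for sub, ms in groups.items()}

    # Marginal means for every subset of factors; () gives the grand mean.
    margins = {idx: marginal(idx)
               for r in range(k + 1) for idx in combinations(range(k), r)}

    ss_within = sum((x - cell_mean[key]) ** 2
                    for key, scores in cells.items() for x in scores)
    df_within = len(cells) * (n - 1)
    ms_within = ss_within / df_within

    table = {}
    for r in range(1, k + 1):
        for idx in combinations(range(k), r):
            ss = 0.0
            for key in cells:
                # Effect estimate for this cell by inclusion-exclusion over
                # the marginal means of every subset of idx.
                effect = sum((-1) ** (r - len(sub))
                             * margins[sub][tuple(key[i] for i in sub)]
                             for s in range(r + 1)
                             for sub in combinations(idx, s))
                ss += n * effect ** 2
            df = prod(n_levels[i] - 1 for i in idx)
            table["x".join(names[i] for i in idx)] = (ss, df, (ss / df) / ms_within)
    table["Within"] = (ss_within, df_within, ms_within)
    return table
```

Keys ordered as (source, truth, sex) match the A, B, C labelling used above. Each F is then compared with the critical value of F(df, df_within), taken from printed tables such as those in Spiegel (1975) or from a library routine such as SciPy's `scipy.stats.f.sf`.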

Discussion

The results of the experiment did not show that the source of information had a statistically significant effect on whether people believed it. Nor did the sex of the subjects make the difference the literature predicted. Thus, the null hypothesis could not be rejected.

Although two of the three main effects and all the interactions were not statistically significant, they were present in the sample data. This suggests that the source of the information may have had a small effect, but the experiment could not distinguish it from chance variation.

5. Implications for HCI

Notwithstanding the failure of the experiment to demonstrate a statistically significant effect, the question it posed is an important one for HCI, especially for interface agents using multi-media. The latest version of Apple’s Guides system presents the guides very effectively as ‘talking heads’, using colour video and sound.

With current techniques and future developments in video and sound editing, multi-media presentations can easily be developed. It will not be long before stock footage and sound can be spliced together seamlessly and automatically to a given specification. This has important implications for the presentation of false information, especially given the current trend for television docu-dramas, which combine fact and fiction in a documentary or news-reporting style.

One could envisage a system, not unlike the Guides system, that not only presents multi-media information to users but also creates it from a specification drawn up by a third party. Such a third party could be a commercial organization that simply wants its product placed in existing footage for advertising. Similarly, a political organization could use it to distort factual events to further its own ends. The Guides database would be a perfect application in which to do this, and it would be particularly effective because most of its users would be school children, who are especially susceptible.

People are known to have very different reactions and emotions towards material they believe to be factual and material they believe to be fiction (Secord & Backman 1969). Quentin Tarantino, director of the ultra-violent 1992 film Reservoir Dogs, acknowledges this:

"I think it [violence] is just one of the many things you can do in movies. But ask me now how I feel morally about violence in real life? I think it's one of the worst aspects of America. But in movies, it's fun. In movies, it's one of the coolest things for me to watch. I like it" (McLeod 1994).

It would be far too simplistic to think that getting people to believe false information presented using multi-media is just a matter of stating that it is real. The style in which the video or film was made, e.g. drama, documentary, or news reporting, would almost certainly have an effect. This style is the language of television and cinema, which can communicate the same information to an audience in different ways. Finding the most effective style for making multi-media information believable is an important area for future research. It would certainly draw upon the established body of film-making and theatrical knowledge, including Laurel’s work on computers as theatre (Laurel 1991).

The results of such research may show that it takes surprisingly little to fool people. The classic Turing test of intelligence is an example: if a computer can convince a person that it is intelligent, that does not make the computer intelligent; it only shows that the computer can fool the person into thinking so.

The Future of Anthropomorphic Agents

Marvin Minsky muses on the old paradox of having a very smart slave (Minsky & Riecken 1994):

"If you keep the slave from learning too much, you are limiting its usefulness. But if you help it to become smarter than you are, then you may not be able to trust it not to make better plans for itself than it does for you."

Anthropomorphic agents could become so ‘real’ that they lose their usefulness; yet, as Minsky muses, if you limit the agent you also limit its usefulness. If agents become so life-like that the illusion of life is very strong, people will relate to their anthropomorphic agent as if it were a real person. Rather than exploiting the speed, accuracy, and reliability of the non-anthropomorphic computer, users will expect it to be slow, inaccurate, and unreliable, because their agent’s character suggests (even insists) so convincingly that it is human.

6. Further Work

After this initial investigation into the believability of information from human or computer sources, a database system without anthropomorphism could be designed to test belief in true and false information. Next, a version of Apple’s Guides system containing true and false information could test how the guides affect belief in that information. Multi-media presentations of fictional and factual events in varying styles, e.g. documentary, drama, and news report, could then be produced to test whether style affects belief in true and false information. Another area to investigate is whether the relationship between a user and a computer changes over time. People relate to people but command machines. If an anthropomorphic interface successfully presents a character to the user, will the user then relate to the machine?

References

- Brown, Steven R., and Lawrence E. Melamed. Experimental Design and Analysis. Sage, 1990.

- Dix, Alan, Janet Finlay, Gregory Abowd, and Russell Beale. Human-Computer Interaction. Prentice Hall, 1993.

- Hanks, Patrick, ed. Collins Concise Dictionary Plus. Collins, 1989.

- Hovland, C. I., and I. L. Janis. Summary and Implications for Future Research. In Personality and Persuasibility, edited by C. I. Hovland and I. L. Janis. Yale University Press, 1959.

- Hoyle, Martha B. A World In Flames. David & Charles, 1969.

- Janis, I. L., and C. I. Hovland. An overview of persuasibility research. In Personality and Persuasibility, edited by C. I. Hovland and I. L. Janis. Yale University Press, 1959.

- Kirakowski, Jurek, and M. Corbett. The Computer User Satisfaction Inventory (CUSI) Manual and Scoring Key. Human Factors Research Group, Department of Applied Psychology, University College, Cork, Ireland, 1988.

- Laurel, Brenda. Interface Agents as Dramatic Characters. Presentation for the panel “Drama and Personality in Interface Design”. In Proceedings of CHI ’89, ACM SIGCHI, May 1989: 105-108.

- Laurel, Brenda. Interface Agents: Metaphors with Character. In The Art of Human-Computer Interface Design, edited by Brenda Laurel. Addison Wesley, 1990.

- Laurel, Brenda. Computers as Theatre. Addison Wesley, 1991.

- Luger, George F., and William A. Stubblefield. Artificial Intelligence and the Design of Expert Systems. Benjamin Cummings, 1989.

- Macintosh, H. G., and R. B. Morrison. Objective Testing. University of London Press, 1969.

- Maxwell, Scott E., and Harold D. Delaney. Designing Experiments and Analysing Data. Wadsworth, 1990.

- McLeod, Pauline. Just who is Quentin Tarantino? Flicks, Volume 7, Number 8, August 1994:16-17.

- Minsky, Marvin, and Doug Riecken. A Conversation with Marvin Minsky About Agents. Communications of the ACM, Volume 37, Number 7, July 1994: 22-29.

- Mook, Douglas G. Psychological Research: Strategy and Tactics. Harper & Row, 1982.

- Oren, Tim, Gitta Salomon, Kristee Kreitman, and Abbe Don. Guides: Characterising the Interface. In The Art of Human-Computer Interface Design, edited by Brenda Laurel. Addison Wesley, 1990.

- Östman, Jan-Ola. Pragmatic Markers of Persuasion. In Propaganda, Persuasion and Polemic, edited by Jeremy Hawthorn. Edward Arnold, 1987.

- Secord, Paul F., and Carl W. Backman. Social Psychology. McGraw-Hill, 1969.

- Spiegel, Murray R. Probability and Statistics. Schaum’s Outline Series. McGraw-Hill, 1975.